Have you ever noticed that the memory metrics shown by “free -m” on a compute node and the output of “nova hypervisor-show” are not in sync?

Let's see why they are “out of sync”!

The “Used_now” value shown in the “nova hypervisor-show” output is the cumulative RAM that the nova-compute service has blocked (allocated) so far for the VMs provisioned on that specific hypervisor (compute node).

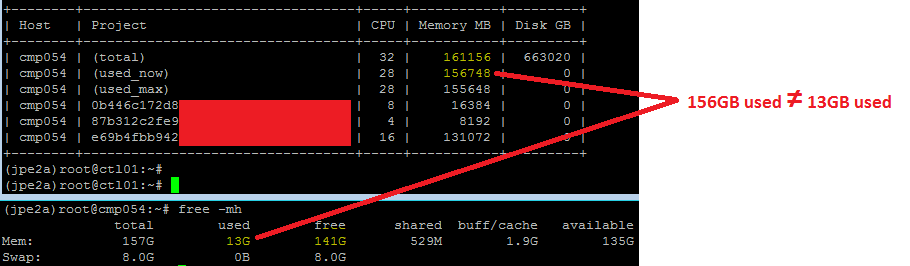

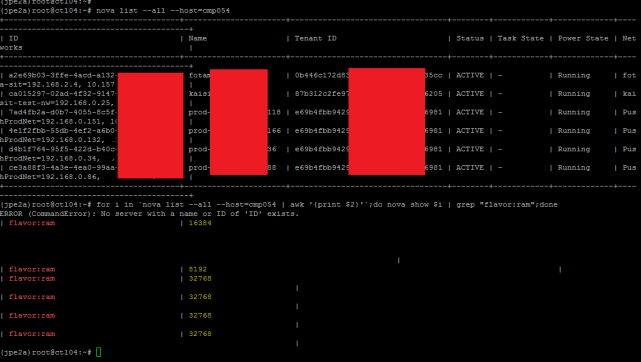

For example, consider compute node cmp054, which has a total of 161GB of physical RAM installed. As shown in figure 1.1 above, six active VMs are running on cmp054.

The RAM allocated to each VM by its flavor is as follows:

- VM1: 16GB
- VM2: 8GB
- VM3: 32GB
- VM4: 32GB
- VM5: 32GB
- VM6: 32GB

Total: 152GB
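In this simplified view, the “used” RAM that Nova reports for a host is just the sum of the flavor RAM of the VMs placed on it. The minimal Python sketch below reproduces the total from the flavor sizes listed above; the dictionary and function names are illustrative only, not part of Nova, and the sketch ignores any memory Nova reserves for the host itself (its reserved_host_memory_mb option).

```python
# Flavor RAM (GB) for the six VMs on cmp054, taken from the example above.
flavor_ram_gb = {
    "VM1": 16,
    "VM2": 8,
    "VM3": 32,
    "VM4": 32,
    "VM5": 32,
    "VM6": 32,
}


def allocated_ram_gb(flavors: dict[str, int]) -> int:
    """Sum the RAM promised to every VM, whether or not the guest actually uses it.

    Hypothetical helper: ignores host-reserved memory (reserved_host_memory_mb).
    """
    return sum(flavors.values())


if __name__ == "__main__":
    print(allocated_ram_gb(flavor_ram_gb))  # 152
```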

To keep things simple, let's assume ram_allocation_ratio = 1.0 in our environment (that is, no RAM overcommit).

With “ram_allocation_ratio” set to 1.0 in the nova.conf file, the nova-compute service on cmp054 has already blocked 152GB of the node's 161GB of RAM for the existing active VMs, leaving only 9GB (161GB minus 152GB) unallocated. If you now try to provision a new VM with more than 9GB of RAM, the Nova scheduler will reject cmp054 as a valid host and report that the node does not have enough resources available.
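The capacity check described above can be sketched as follows. This is a simplified illustration, loosely modelled on how Nova compares a request against the host's RAM scaled by ram_allocation_ratio; the function name and signature are hypothetical, and it ignores host-reserved memory and every other scheduler filter.

```python
def can_host(total_ram_gb: float, allocated_ram_gb: float,
             requested_ram_gb: float, ram_allocation_ratio: float = 1.0) -> bool:
    """Return True if a VM requesting requested_ram_gb still fits on the host.

    Hypothetical, simplified check: schedulable RAM is the physical total
    multiplied by ram_allocation_ratio, minus RAM already allocated to VMs.
    Actual guest memory usage (what "free -m" shows) plays no part here.
    """
    schedulable_ram_gb = total_ram_gb * ram_allocation_ratio
    free_for_scheduling_gb = schedulable_ram_gb - allocated_ram_gb
    return requested_ram_gb <= free_for_scheduling_gb


if __name__ == "__main__":
    # cmp054: 161GB physical, 152GB already allocated, ratio 1.0
    print(can_host(161, 152, 8))   # True: 8GB fits in the remaining 9GB
    print(can_host(161, 152, 16))  # False: 16GB exceeds the remaining 9GB
```

Note that only allocated RAM enters the calculation, which is why a host can be “full” for the scheduler while its guests are nearly idle.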

Note that the “free -m” command reports the actual RAM utilisation on the compute node, regardless of how much RAM has been allocated to the guest VMs.

In our case, “free -m” on cmp054 shows 14GB of RAM in use (see screenshot 1.2 above). However, the Nova resource tracker in the nova-compute log reports 152GB of RAM used, the same value shown by “nova hypervisor-show”. This is because the six active customer VMs were provisioned with large RAM flavors but are not running any heavy workload, and the hypervisor typically backs guest memory with host RAM only as the guest actually uses it. So the actual RAM usage on the host OS (the compute node) stays as low as 14GB.
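For the other side of the comparison, the sketch below approximates the host's real memory usage by reading /proc/meminfo on a Linux compute node, the same kernel file that “free” reads. It computes used memory as MemTotal minus MemAvailable; this is an assumption, since the exact “used” formula differs between procps versions, so expect small differences from the figure “free -m” prints. The function names are illustrative.

```python
import os


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value}; most values are in kB."""
    values: dict[str, int] = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields:
            values[key.strip()] = int(fields[0])  # first token is the number
    return values


def actual_used_gib(meminfo: dict[str, int]) -> float:
    """Approximate RAM really in use on the host: MemTotal - MemAvailable, in GiB."""
    used_kb = meminfo["MemTotal"] - meminfo["MemAvailable"]
    return used_kb / (1024 * 1024)


if __name__ == "__main__":
    allocated_gb = 152  # what Nova reports for cmp054 in the example above
    if os.path.exists("/proc/meminfo"):
        with open("/proc/meminfo") as f:
            used = actual_used_gib(parse_meminfo(f.read()))
        print(f"Allocated by Nova: {allocated_gb} GB")
        print(f"Actually used on this host: {used:.1f} GiB")
```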

Hence, the used RAM reported by “free -m” and by “nova hypervisor-show” NEED NOT be the same.

P.S.

OpenStack allows you to overcommit CPU and RAM on compute nodes (via the cpu_allocation_ratio and ram_allocation_ratio options in nova.conf). This lets you increase the number of instances running on your cloud, at the cost of potentially reduced instance performance.
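As a rough illustration of that trade-off, the sketch below counts how many VMs of a single flavor size fit on cmp054's 161GB for a few ram_allocation_ratio values. It uses the same simplified capacity model (physical RAM × ratio), ignores host-reserved memory, and the function name is hypothetical.

```python
def max_instances(physical_ram_gb: float, flavor_ram_gb: float,
                  ram_allocation_ratio: float) -> int:
    """How many VMs of one flavor fit, if schedulable RAM = physical RAM * ratio.

    Hypothetical helper; host-reserved memory and other limits are ignored.
    """
    schedulable_ram_gb = physical_ram_gb * ram_allocation_ratio
    return int(schedulable_ram_gb // flavor_ram_gb)


if __name__ == "__main__":
    for ratio in (1.0, 1.5, 2.0):
        print(ratio, max_instances(161, 32, ratio))
    # 1.0 -> 5, 1.5 -> 7, 2.0 -> 10 (32GB flavors on a 161GB host)
```

The extra instances come with no extra physical RAM, which is why heavily overcommitted hosts can suffer once guests start using the memory they were promised.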

Ref: https://docs.openstack.org/arch-design/design-compute/design-compute-overcommit.html

Regards,

Vinoth Kumar Selvaraj
